Mello Addresses US House Panel on Advancing AI in Health Care

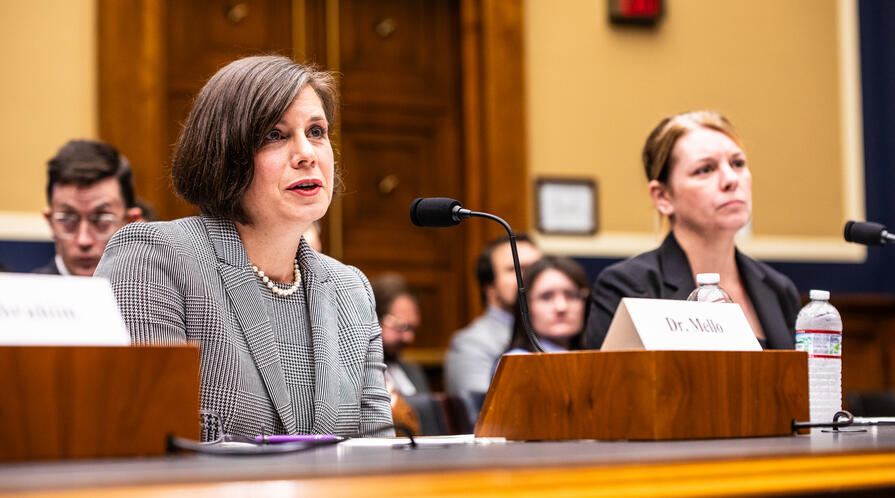

Stanford Health Policy's Michelle Mello testifies about opportunities to advance American health care through the use of AI technologies.

SHP's Michelle Mello, JD, PhD, brought an optimistic outlook to a U.S. House committee hearing on advancing artificial intelligence (AI) in health care, highlighting its enormous potential while urging lawmakers to recognize the government’s critical role in safeguarding both patients and health care organizations.

"I’m part of a group of data scientists, physicians and ethicists at Stanford University that helps govern how Stanford AI tools are used in health care at our facilities," Mello, a professor of health policy and of law, said in her testimony before the U.S. House Committee on Energy & Commerce subcommittee on health on Sept. 3, 2025.

“I’m enthusiastic about health care AI. There are so many intractable problems that it will help solve,” she said. “But AI presents both a historic opportunity and a serious risk, and although improperly designed regulations may hinder innovation, there is a critical role for the government in ensuring the conditions for innovations to translate into AI adoption.”

There is no shortage of innovation in the AI space, Mello said, but its adoption has been slow because of what experts call a “foundational trust deficit.”

In her prepared testimony, Mello said there were four areas where policy interventions could help build that trust and spread innovation and adoption. She listed them as follows:

- Ensure that the entities that develop and use AI adequately assess, disclose, and mitigate the risks of these tools. Health care organizations and health insurers should be required to show that they have an AI governance process in place that meets certain standards. AI developers should be required to document and disclose key pieces of information about their products’ design and performance.

- Support independent research on how AI tools perform in practice. Such research can help health care organizations and insurers answer important questions about where investments in AI solutions can generate the most benefit and how to minimize risks. It also ensures that this knowledge is disseminated broadly.

- Modify health care reimbursement policies to better support adoption and monitoring of effective AI tools. Many AI tools will not save health care organizations money, and monitoring them properly can be costly.

- Address shortcomings in the Food and Drug Administration’s statutory framework to make the agency a more constructive partner in AI development and adoption. In some areas, the agency’s authority doesn’t go far enough; in others, it burdens developers with an antiquated regulatory framework that did not anticipate the AI revolution.

“AI holds enormous promise for improving health care, but its adoption is being slowed by a fundamental trust deficit,” Mello concluded, before taking questions from committee members. “By taking practical steps now, Congress can help close that gap, make health care providers and the public more excited to receive the products coming out of industry—and ensure that innovation truly reaches the bedside.”
